Coles Group is a year into “reimagining” how it manages and treats data, setting up a new data management platform that it hopes will lead to faster insights and the unshackling of advanced analytics.

Head of data engineering and operations Richard Glew told the Databricks Data + AI Asia Pacific conference that “for Coles to stay as a leading supermarket in Australia”, it has “some work to do” to get its data in order.

“Data is a huge issue for us,” Glew said.

“We've got a lot of it, of various and variable quality. Just understanding where it is and what it is, is a challenge, and we have to go and retrofit a lot of that metadata back in as well.”

The retailer is in the process of setting up an enterprise data platform (EDP), which is “designed to be a universal data repository for effectively all the data that we want to share either internally or externally as an organisation”.

One of the keys to the project is that Coles is drawing a line - what Glew called “a separation of concerns” - between data management and the “things that we want to do with that data”.

“One of the conversations I have with people is, ‘Is this [EDP] the singing, all dancing data thing that's going to give us reports and our machine learning?’ and the answer is no,” Glew said.

“It's just about data management. So we've really separated that concern apart where we just focus on the management, and then that is the foundation for pretty much everything else you'd want to do with data.”

The EDP is being set up as a repository of clean, real-time data that can be exposed to different parts of Coles Group that can self-serve their own needs, without needing to involve a central data function.

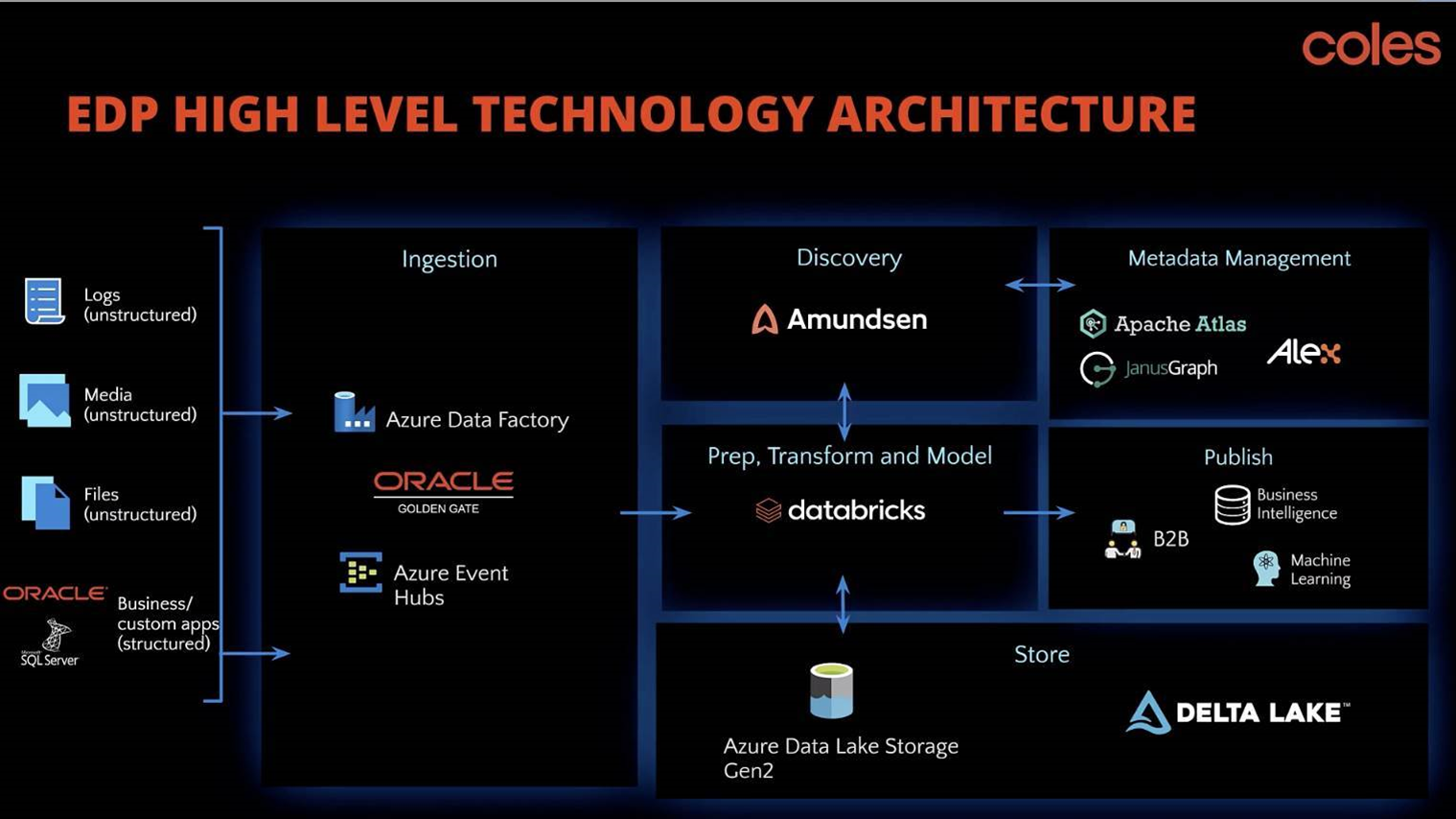

Though the EDP ultimately backs onto Azure - and makes use of some additional native Azure services to draw in data from source systems - its architecture is intentionally “open source friendly”, according to Glew.

“That doesn't mean that we're installing our own versions of everything and brewing our own stuff, but it does mean that we have the ability to move things around pretty easily,” he said.

The EDP will ultimately house both unstructured and structured data, though Glew noted there isn’t presently much unstructured information being brought in.

Mostly, the EDP is populated with “business app data, and other structured and semi-structured data”.

One of the largest chunks of data is coming in from what Glew describes as “pretty much the world's largest Oracle data warehouse”.

The retailer is using one of Oracle's products, GoldenGate, “to shift [those] very large amounts of data out of the on-prem [Oracle] platforms into Azure”.

Once data is in the EDP, Coles uses Databricks to prepare, transform and process it, and Lyft’s Amundsen open source software for data discovery, among other components.

Coles has advanced analytics and business intelligence teams already plugged into the EDP. The retailer’s business units and even some of its external partners are also able to access the platform.

Glew said he hoped the EDP would allow Coles to move faster in its push to embrace machine learning.

The retailer has not previously discussed much of its machine learning work, though it has looked at using algorithms in conjunction with cameras to recognise out-of-stock items on shelves.

“The first thing [we want to improve by having the EDP] is just around our general execution - what are the things that we just can't do today in our current environment?” Glew said.

“No surprise to anybody here at this summit, but machine learning is very high on the agenda.

“We have a pretty sophisticated team of data scientists who do some pretty awesome work, and they're very limited - at least, that's what they keep telling me - by the environment that they have to work in today. So that's something we want to do something about.”

The retailer also hopes to move more quickly. Being in the cloud should let data users avoid issues that come with hosting data infrastructure on-premises, such as whether there is enough compute available and the need to formally wrap the work in a project structure.

“Our current environment, while it is secure, is secure in a way that doesn't necessarily make it easy to do things with our data quickly or easily,” Glew said.

“In the spirit of continuous improvement there's always things that we can do better.”
